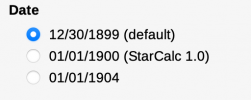

You are probably the only programmer in the world who has not be bitten badly by start date issues. Here's a simple test. Create an Excel spreadsheet on an old Windows machine. Add several columns that are formatted as dates. Then move the same spreadsheet over to excel on a Mac. You will notice all the dates are wrong.

Oh, don't get me wrong, I do get the issue (I misunderstood it at first based on the initial description but Rene clarified it). It's just got to do with old versions of Excel and using a different epoch. I just have never ran into it because I don't use spreadsheets for anything that I expect to keep, care about, or look at after two weeks (that's not an insult at people who do but just me describing my use cases). Excel and spreadsheet software is mostly geared for doing short-term analysis and data storage / transmission (e.g. financial statements). Using it as any kind of long-term durable data storage mechanism is fraught with peril (as you noted). For that kind of stuff, I would use a database or plain-text (something which has been popularized and evangelized since the 1990's with the start of the Agile movement).

Or take a very old Lotus 123 sheet that has been migrated for years, it will give 3 differnet dates for date fields on older Excel versions, newer ones, older mac Excel and newer mac Excel. Take a spreadsheet from a Linux system and try to read it in Excel on a mac or windows and none of the dates will be correct UNLESS you specifically set up the start date for that sheet to be what matches the target system.

Now, take a spreadsheet from a system from someone in Canada, whose dates were stored in a different format than US based dates. But if you don't know that and try to turn them into something you can use you will find things really off.

The Mac to Windows conversion is over a year difference. Every time we have a leap year the Windows system gets further off by a day since Windows Excel does not calculate leap years properly for 00 ending years.

Define many years? I have data in spreadsheets that started out on Data General machines from the early 1980's . I have some that are the descendents of Lotus 123 sheets, a few from a bunch of different flavors of Unix and some from some IBM mainframes and Sun Microsystem machines including a SPARC station. One I have to deal with regularly, as in 2-3 times a year, is a spreadsheet that is from a COMPAQ Alpha station XP1000 running Digital Unix with 2 gigabytes of memory. It's still in use to this day and it's a total PITA, made even worse by the fact it's in Australia and they don't like to deal with anything outside their 2 approved data input packages which store files in Excel 97 format. (Which has all the aforementioned date issues.)

Yeah, it sounds like you deal with a lot of legacy stuff. That's an unfortunate reality of migrating information across so many applications and systems, it just gets messier and messier over time. Heck, let me tell you all about the joys of migrating source control platforms and technologies ... whew boy.

Personally, I don't use anything legacy at all anymore and won't work on anything legacy either. Which to be clear, for me, I define legacy as anything prior to 2010 (I know, I know ... that's barely yesterday). I just don't have the time, energy, or interest in putting up with that kind of ancient voodoo. Unless I am paid extremely well, I won't touch stuff from the dawn of time itself (i.e.

anything from the 20th century). The ONLY exception is Emacs but that's another story since it's actively maintained and is nothing like it was all those years ago.

Regarding formats and parsing, yep. I am no stranger to that either. It gets fun when I have to mix and match between different programming languages that have different defaults too. As I mentioned elsewhere on the forum, this is why I standardized years ago to follow formats like ISO-8601. Even for my professional work, programming languages largely standardized their date time libraries and well documented all of the "gotchas" and eccentricities.

Couple that all with the fact that I don't cross OS boundaries all that often anymore as I simplified my OS usage several years ago and went onto macOS for everything. However, even when I do use Windows, I just emulate Unix as the baseline/standard (i.e. I emulate it via tools like Cygwin and MSYS2 on any Windows machines I would use).

That is why I store all dates and times as strings, not date time fields.

Dates are the bane of my programming existence.

Times run a close second especially if you attempt to handle daylight savings times which have changed over the years. Even just timezones are a problem.

Well, storing as strings is just trading one set of problems for another: parsing. Dates and times are just plain old integers under the hood, really. It's usually easier to work with them in that format than strings (though six of one and half a dozen of the other). Though, I digress, I do understand the frustration. I won't write my own date time libraries anymore because it's just too much work/effort for all of the reasons discussed. I admire the folks that wrote great libraries like the chrono library for C++, Java's/.NET's entire standard library, moment.js/day.js for JS/TS, etc. It's thankless work but necessary.

BTW, since you brought it up, dates are

trivial, absolutely easy, compared to time and time zones. Not only are they political constructs (international/federal laws, state laws, local laws, tribal laws, etc. make this just

delightful ... looking at you Arizona and Indiana) but they are geographically

delightful too (yeah, I am talking about the fact that cell towers can just be

fun to deal with).

Dates are the bane of my programming existence.

Date and time are worse than dealing with printing issues. At least printing can usually be solved with enough $ to just go buy a new printer.

I don't use printers anymore, so I dodge that bullet too.

Maybe once a year ...

maybe. I am 100% on the "digital everything" train.

My biggest problem in programming is actually less about any tactical thing like dates and times, null, or data storage formats. It's scaling it all up to meet the needs of super-enterprises (my own coined term: really big enterprises) and infrastructure now of days. Getting everything to talk to each other, scaling it all for performance and cost, and being on the cutting edge of everything (i.e. very hard to google my way out of problems). That's a story for another time though.